By using the term ecology, I mean the study of the interaction of people with their environment: the environment of human awareness and knowledge.

I think that most people feel that they are aware of their surroundings. Psychologists say that because you feel as though you are aware, you assume everyone else is more or less as aware as you. Unfortunately, it does not turn out that way. There are a host of ill effects, because there seems to be very little in our awareness that any few people can agree upon. The lack of shared knowledge and of shared intelligence has an effect on the level, type or kind of awareness in societies of people everywhere. If everyone were (explicitly) conscious of one and the same thing, then we could say that everyone is conscious of such and such. But we cannot make such a statement or claim in this day and age: a day and age of modern communications, computers and “open information”, mind you.

Nonetheless, human beings are modelers in this world or environment, in that we build or construct models of it that suit us or satisfy us, either by explaining or by predicting the circumstances in which we find ourselves. I should say that I take it for granted that there are both good and bad models. I want to introduce you to a good model of the organism of intelligence (mentioned in my last post) that each of us uses, even though most of us are not very conscious of it. I expect that anyone can tell a good model from a bad one. A good model is one that stirs or moves your awareness. It affects you in such a way that you are disposed, obliged even, to pay closer attention, as it obliges one to think more exactly about someone or something. It is one that warrants becoming more aware of it, conscious of it, learning it, and ultimately using it for enlightenment and for gain.

A model M is equivalent to a knowledge K. M=K because we employ models in making predictions about certain attributes just as we employ our knowledge. The term “attribute” is used here as a noun in an ordinary way to signify a quality or feature regarded as a characteristic or inherent part of someone or something. Every environment has attributes that are characteristic of it.

For example, the ecosystem is an environment that has the attributes of air, water, earth and fire. The goal is to find just those attributes (and no more) that are enough to quantify the valuable or significant changes that make a substantial difference, that is, the changes that affect our surroundings in some way. To generate or induce knowledge and awareness we must perform a transformation: we must transform (what is recognized to be) an attribute of the environment into a personal or individual affect. That may sound strange, so let me explain it a little further.

In the case of the ecosystem, the attributes air, water, earth and fire can affect us, and one might readily imagine how the presence or absence of water or air can induce different states of mind. In any case, they may be the cause of some serious condition that could affect any one of us; imagine the situation where there is no air to breathe. This quality makes air a good attribute of this environment (the ecosystem), because we can readily imagine and predict how we could be affected given some arbitrary change in the situation. But are these attributes sufficient, and all that is necessary, to predict all possible changes in the environment that might affect us?

Imagine now how difficult it must be for scientists, for anyone, to build a model of the environment of human knowledge, awareness and consciousness. In some circles of research, that is what AI and AGI engineers are trying, and have long been trying, to do. It is true that the engineers and programmers have not been up to the daunting task. Yet that does not diminish the fact that it is what needs to be done in order to produce an AGI. After all, we need to be able to model our own situational awareness. By doing so, we may become better equipped to anticipate and reduce the effects of unwanted and harmful eventualities of which many people are all too aware.

For example, economists create models of economies with certain attributes and premises. For better or worse, this is done in order to deduce conclusions about possible eventualities. Economic models are useful as tools for judging which alternative outcomes seem reasonable or likely. In such cases the model is being used for prediction. Thus the model is part of some knowledge about the environment.

The model embodies the knowledge because it is itself a capacity for prediction. Thus, a model M can be considered to be fully equivalent to a knowledge. Therefore we can assume here that a model is synonymous with a knowledge. More specifically, it appears that a qualitatively relative definition of knowledge is warranted: “A Knowledge K is a capacity to predict the value which an attribute of the environment will take under specified conditions of context.”
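For readers who think in code, the definition above can be sketched as a small lookup structure. This is only an illustration under stated assumptions: the class name `Knowledge`, the method names, and the sample contexts and values ("drought", "scarce" and so on) are hypothetical and do not come from the model described in this post.

```python
from typing import Dict, Tuple


class Knowledge:
    """A capacity to predict the value an attribute takes in a given context.

    Hypothetical illustration: predictions are stored as a table keyed by
    (attribute, context). A real knowledge would not be a fixed table.
    """

    def __init__(self, table: Dict[Tuple[str, str], str]) -> None:
        self._table = dict(table)

    def can_predict(self, attribute: str, context: str) -> bool:
        # True when this knowledge covers the attribute under this context.
        return (attribute, context) in self._table

    def predict(self, attribute: str, context: str) -> str:
        # Return the predicted value; raises KeyError if nothing is known.
        return self._table[(attribute, context)]


# Made-up entries for the ecosystem attribute "water".
ecosystem_model = Knowledge({
    ("water", "drought"): "scarce",
    ("water", "monsoon"): "abundant",
})

print(ecosystem_model.predict("water", "drought"))
print(ecosystem_model.can_predict("air", "drought"))
```

The point of the sketch is only that the model and the knowledge are the same object here: whatever can answer `predict(attribute, context)` counts as a knowledge in the sense of the definition.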

Now let’s talk about people (sapients) and frame a model of their environment, that is, the environment of which they are aware (sapient). We can assume that everyone’s awareness changes in regular and predictable ways, and that each person has some knowledge that allows them to predict the value of attributes in their own awareness. Here, as you see, an awareness is equivalent to the environment in which we abide. We are intuitively surrounded by, or abiding in, the environs of sapience.

Before I begin the example, let me reveal that I have a knowledge of the attributes of a denotative awareness that includes and subsumes all possible connotative environments. I will say there are eleven attributes to this environment of awareness, but in this example I will only introduce two of them, which we call “Self” and “Others”. Like all the attributes of this rather explicit awareness, these two attributes, Self and Others, correspond with the real entities and their activities, self and others, in the world of ordinary affairs and situations. I am only using these two in order to keep the explanation simple and real, and because that is all that is necessary to demonstrate the meaning of intelligence, which I will now define as: the organism or mechanism of the attributes of the environment to affect awareness.

So, to be clear, I am not going to give the complete specification of that organism or mechanism here, but I will show you how two of the attributes of the environment I have clearly in mind “affect” both my predictions and yours. Incidentally, let me also define a “mind” as a (psychical) state space (e.g. abstract and mental space). So we begin with an assertion: Besides my own self, there are others in my environment; the environment in which I exist and of which I am aware.

I embody the organism we call intelligence (as do you), and I have a knowledge K to predict that the value of a single measurement of the attribute Others, equivalent to and connotative of “wife”, will be Gloria, just in case I am asked about it. This prediction is observed to be a transformation of the state space of the attribute Others, just like the state space of the attribute Self. Under the specified conditions and in the context of my own environment, the state space of Self is transformed by my own knowledge K to be equivalent to my name=Ken. Under the same specified conditions of context, the connotative context “my wife” is connected to the denotative context (observable yet normally left tacit or unemphasized) by taking successive measurements (e.g. making interpretations) of these explicitly shared attributes of the environment of my awareness. I believe that, once consumed, that much ought to become clear and self-evident; that is, I take it as being axiomatic.

I can also predict that additional measurements of the attributes Self and Others will yield different values, equivalent to the connotative appearance of several other self-organized entities, things or activities that become salient to my own environment from time to time. In this way (and only in this way) my knowledge K is different from your knowledge T. It is peculiar to my thoughts and perceptions in the context of the environment situated where I live, i.e., to my awareness of that environment. You will have a similar situation: your own “context” (the particular circumstances that form the setting for an event, statement, or idea, and in terms of which it can be fully understood and assessed) of the environment of your own awareness. We don’t know each other’s knowledge or awareness. We (may only implicitly) know and share the explicit attributes of such a (sapient) awareness.
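The difference between my knowledge K and your knowledge T can be sketched in a few lines of code. Treat it as a hedged illustration only: the function `make_knowledge`, the context strings, and the placeholder values for T are hypothetical. The only values taken from this post are Ken and Gloria.

```python
# Two of the eleven attributes; these are shared by everyone.
ATTRIBUTES = ("Self", "Others")


def make_knowledge(table):
    """Build a predictor from a private table of (attribute, context) -> value.

    Hypothetical helper: the shared part is ATTRIBUTES, the private part is
    the table of contexts and values held by one person.
    """
    def predict(attribute, context):
        if attribute not in ATTRIBUTES:
            raise KeyError(f"not an attribute of this environment: {attribute}")
        return table[(attribute, context)]
    return predict


# My knowledge K, using the values given in the text.
K = make_knowledge({
    ("Self", "my name"): "Ken",
    ("Others", "my wife"): "Gloria",
})

# Your knowledge T: same attributes, different private values.
# The values below are placeholders, since I do not know them.
T = make_knowledge({
    ("Self", "my name"): "<reader's name>",
    ("Others", "my wife"): "<unknown to the author>",
})

print(K("Others", "my wife"))
print(K("Self", "my name") == T("Self", "my name"))
```

Both predictors accept exactly the same attributes, which is the shared and explicit part. What each returns under a given context is private, which is the sense in which we do not know each other's knowledge.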

That is to say that I live in the same environment (of general awareness and sapience) as you. And I have a knowledge K of Self and Others as attributes of this environment, the relevance or significance of which you may only now be becoming aware of. Both Self and Others are clearly attributes in our shared awareness. In fact, they are attributes of a universal environment for Homo sapiens. Remember that a knowledge T, K, …, or M is a capacity to predict the value which an attribute of the environment will take under specified conditions of context. Everyone has their own name, their own knowledge (whether implicit or explicit) and their own conditions of context. This is the private knowledge held inside them, and perhaps also by relatives and friends.

Now we are able to make some observations and see some of the implications that flow from what has been stated above. We can intuit, for instance, that a wholesome knowledge K is evidenced whenever an organism produces information or reduces a priori uncertainty about its environment. I realize this is incomplete, though it demonstrates that (connotative and social) knowledge, text and all computer data are synthesized from (the transformation of) valid attributes A, which cannot be construed as being contained in or patterned by (computer) data or by modern language.
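One conventional way to put a number on "reducing a priori uncertainty" is Shannon entropy. That choice of measure is an assumption added here for illustration, since the post does not name one, and the four equally likely candidate values are made up.

```python
import math


def entropy_bits(probabilities):
    """Shannon entropy, in bits, of a discrete probability distribution."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


# Before any knowledge: four candidate values of an attribute, equally likely.
prior = [0.25, 0.25, 0.25, 0.25]

# With a knowledge that predicts the value with certainty in this context.
posterior = [1.0, 0.0, 0.0, 0.0]

# Uncertainty removed by the knowledge, in bits.
information_gained = entropy_bits(prior) - entropy_bits(posterior)
print(information_gained)
```

On this reading, a knowledge is evidenced by the gap between the two entropies: the larger the gap, the more uncertainty about the attribute has been removed.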

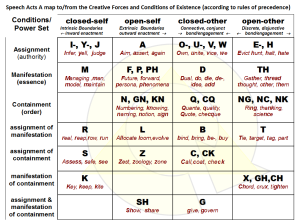

Any invariant or regular and unitary attribute A (whereby individuals are distinguished) ought to be seen as a continuity to be treated as valid, and used as a handy and trustworthy rubric for making or producing transformations (in the state space of a mind) applied in a context of the environment. Each measurement produces a single valuation that could be the same or different at any moment and from place to place, only appearing to be impossibly chaotic or complex. For those who understand such things, such an attribute may be considered a correspondence. This correspondence may be formalized as a functional mapping of the form A: Ɵ → Ɵ, where Ɵ is the state space of the environment and A maps its denotative states to its connotative states. We found more than a dozen types or configurations of functional mappings that are applied in variant connotative contexts.

So, to conclude: an environment of human awareness can be understood simply as the denotative and connotative surroundings and conditions in which the organism of the attributes (and capacity of independent awareness with a knowledge) operates, is asserted and is applied.

The good news is that now that we know that it is the organism of the attributes of the environment of awareness, consciousness, that is both explicit and universal (not connotative belief, knowledge or perception or conception –which are all relatively defined) we can get down to resolving differences while accommodating everyone. To be specific, we can seek better understanding and control over perceptual and conceptual states of awareness in a decidedly invariant environment (awareness) of continuous change, where intelligence is any organism or mechanism of the attributes of an environment that affects such awareness and consciousness.

______

We can also define semantics as the correspondence of both the denotative and connotative states of conception to the set of all possible functions given the attributes of the environment. Now, if you want to know more details you will need to put me on a retainer and pay me.